Artificial Intelligence is no longer just a technical term for enterprises pursuing winning business strategies in a hypercompetitive technology ecosystem. AI models hold great potential to transform industries through their command of natural language processing and their ability to automate complex business functions. As AI becomes central to enterprise strategy, optimizing models for specific tasks is essential to achieving the expected results within the expected timeframe.

Systematic optimization is the foundation of strong model performance. Two approaches have emerged as the primary optimization strategies: fine-tuning and prompt engineering.

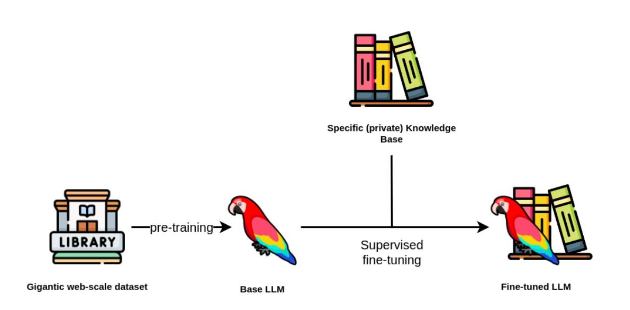

Definition of Fine-tuning

Fine-tuning is the process of further training a pre-trained AI model, such as an LLM, on a smaller, task-specific dataset so that it performs a particular task well. Pre-trained models are trained mostly on general data drawn from a broad knowledge base. When you fine-tune such a model on data relevant to your domain, you make targeted adjustments to its internal parameters so that it can perform customized tasks and generate domain-specific output for end users.

For example, a pre-trained chatbot for an e-commerce website is fine-tuned differently from one built for a medical facility’s online platform. Unlike training from scratch, fine-tuning builds on existing knowledge and requires fewer computational resources.
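As a rough illustration of why starting from pre-trained parameters saves work, the sketch below fits a one-parameter model with plain gradient descent from two starting points and counts the steps each needs. The model, the data, and the name `steps_to_fit` are hypothetical stand-ins rather than any real model or library, and an initial weight of 0.0 is only assumed to represent training from scratch.

```python
def mse(w, data):
    """Mean squared error of the one-parameter model y = w * x."""
    return sum((w * x - y) ** 2 for x, y in data) / len(data)


def steps_to_fit(w, data, learning_rate=0.05, tolerance=1e-6, max_steps=1000):
    """Count gradient descent steps until the loss drops below `tolerance`."""
    for step in range(max_steps):
        if mse(w, data) < tolerance:
            return step
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= learning_rate * grad
    return max_steps


# Hypothetical domain data that follows y = 2.2 * x.
domain_data = [(x, 2.2 * x) for x in (1.0, 2.0, 3.0)]

# Assumed starting points: a "pre-trained" weight already close to the
# domain's behavior, and 0.0 as a stand-in for training from scratch.
steps_from_pretrained = steps_to_fit(2.0, domain_data)
steps_from_scratch = steps_to_fit(0.0, domain_data)

print(steps_from_pretrained, steps_from_scratch)
```

In this toy setting the pre-trained start needs fewer updates because it begins closer to the target. Real models have far more parameters, but the same intuition is why fine-tuning is usually cheaper than training from scratch.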

Process of Fine-tuning

Through iterative adjustments to its parameters, the model adapts its learned representations to better suit the target task. The process uses algorithms and techniques chosen to maximize performance on that task, and it proceeds in several steps.

- Selection of pre-trained model: The technical process of fine-tuning begins with the careful selection of the pre-trained model. This step lays the groundwork for successful fine-tuning.

- Identification of target task and defining the goals of fine-tuning: This step involves defining the scope and objective of fine-tuning. Plan the target task and domains for which the model needs optimization.

- Preparation of task-specific data: Curate datasets relevant to the target task and ensure the quality and representativeness of data to facilitate effective fine-tuning. The data should undergo preprocessing, cleaning, normalization, extraction, and storage for the model.

- Configuration of fine-tuning parameters: This phase sets hyperparameters such as learning rate, batch size, and weight decay, along with the optimization algorithm and loss function, to optimize the model’s performance on the specific task.

- Training and iterative refinement: The model enters the training phase with configured model architecture and parameters. Iterative refinement techniques, such as early stopping and learning rate scheduling, help optimize training dynamics and prevent overfitting.

- Evaluation and validation: This step measures accuracy, precision, robustness, fitting errors, and other metrics that indicate the model’s efficacy.

- Fine-tuning and Deployment: Adjustment, training, evaluation, and validation are repeated until the model reaches satisfactory performance metrics. The fine-tuned model is then ready for deployment in real-world applications.
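The steps above can be sketched end to end on a toy problem. This is a minimal sketch under stated assumptions, not a production recipe: the "pre-trained model" is a hypothetical one-feature logistic classifier, the data is made up, and names such as `FineTuneConfig`, `fine_tune`, and `evaluate` are illustrative only. It shows configured hyperparameters, a decaying learning rate schedule, weight decay, early stopping on validation loss, and a final evaluation of accuracy and precision.

```python
import math
from dataclasses import dataclass


@dataclass
class FineTuneConfig:
    """Hypothetical hyperparameters for the toy run below."""
    learning_rate: float = 0.5
    lr_decay: float = 0.99      # learning rate schedule: multiply by this each epoch
    weight_decay: float = 0.001
    max_epochs: int = 200
    patience: int = 5           # early stopping: epochs to wait without improvement


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def log_loss(params, data):
    """Average binary cross-entropy of a one-feature logistic model."""
    w, b = params
    total = 0.0
    for x, y in data:
        p = min(max(sigmoid(w * x + b), 1e-12), 1 - 1e-12)
        total -= y * math.log(p) + (1 - y) * math.log(1 - p)
    return total / len(data)


def fine_tune(params, train_data, val_data, cfg):
    """Gradient descent with weight decay, LR decay, and early stopping."""
    w, b = params
    lr = cfg.learning_rate
    best_params, best_val = (w, b), log_loss((w, b), val_data)
    epochs_without_improvement = 0
    n = len(train_data)
    for _ in range(cfg.max_epochs):
        grad_w = sum((sigmoid(w * x + b) - y) * x for x, y in train_data) / n
        grad_b = sum(sigmoid(w * x + b) - y for x, y in train_data) / n
        w -= lr * (grad_w + cfg.weight_decay * w)
        b -= lr * grad_b
        lr *= cfg.lr_decay
        val_loss = log_loss((w, b), val_data)
        if val_loss < best_val:
            best_params, best_val = (w, b), val_loss
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= cfg.patience:
                break  # validation loss stopped improving
    return best_params, best_val


def evaluate(params, data):
    """Accuracy and precision at a 0.5 decision threshold."""
    w, b = params
    preds = [1 if sigmoid(w * x + b) >= 0.5 else 0 for x, _ in data]
    labels = [y for _, y in data]
    true_pos = sum(1 for p, y in zip(preds, labels) if p == 1 and y == 1)
    false_pos = sum(1 for p, y in zip(preds, labels) if p == 1 and y == 0)
    accuracy = sum(1 for p, y in zip(preds, labels) if p == y) / len(labels)
    precision = true_pos / (true_pos + false_pos) if true_pos + false_pos else 0.0
    return {"accuracy": accuracy, "precision": precision}


# Hypothetical task data: the label is 1 when x is positive.
train_data = [(-2.0, 0), (-1.0, 0), (-0.5, 0), (0.5, 1), (1.0, 1), (2.0, 1)]
val_data = [(-1.5, 0), (-0.25, 0), (0.25, 1), (1.5, 1)]

pretrained = (1.0, -1.0)  # assumed "pre-trained" weight and bias
cfg = FineTuneConfig()

val_loss_before = log_loss(pretrained, val_data)
metrics_before = evaluate(pretrained, val_data)
tuned, val_loss_after = fine_tune(pretrained, train_data, val_data, cfg)
metrics_after = evaluate(tuned, val_data)

print(metrics_before, metrics_after)
```

Keeping the best parameters seen on the validation set, rather than the last ones, is what lets early stopping guard against overfitting: training can run past the best point without that later state being the one deployed.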

Challenges of Fine-tuning

Fine-tuning poses several challenges that must be addressed to keep AI models effective. The 7 key challenges are:

- Overfitting

- Catastrophic forgetting

- High computational resources

- Data scarcity

- Domain shift

- Ethical considerations

- Hyperparameter selection and tuning
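Catastrophic forgetting, one of the challenges listed above, can be seen in miniature. In the hedged sketch below, a hypothetical one-parameter model that fits "general" data perfectly is fine-tuned on "domain" data with a different pattern; its error on the general data then rises. The datasets and function names are made up for illustration.

```python
def mse(w, data):
    """Mean squared error of the one-parameter model y = w * x."""
    return sum((w * x - y) ** 2 for x, y in data) / len(data)


def fine_tune(w, data, learning_rate=0.05, epochs=100):
    """Plain gradient descent on `data`, starting from weight `w`."""
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= learning_rate * grad
    return w


# Hypothetical data: the general pattern is y = 2x, the domain pattern is y = 3x.
general_data = [(x, 2.0 * x) for x in (1.0, 2.0, 3.0)]
domain_data = [(x, 3.0 * x) for x in (1.0, 2.0, 3.0)]

pretrained_w = 2.0  # assumed pre-trained weight that fits the general data
tuned_w = fine_tune(pretrained_w, domain_data)

general_loss_before = mse(pretrained_w, general_data)
general_loss_after = mse(tuned_w, general_data)
domain_loss_before = mse(pretrained_w, domain_data)
domain_loss_after = mse(tuned_w, domain_data)

print(general_loss_before, general_loss_after)
print(domain_loss_before, domain_loss_after)
```

Tracking a held-out general benchmark alongside the domain metric, as this sketch does with `general_data`, is one simple way to notice forgetting before deployment.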

Definition of Prompt Engineering

Prompt engineering is the creation of specialized instructions, or prompts, that guide AI models to generate desired results. In contrast to fine-tuning, prompt engineering focuses on framing the inputs provided to the model. How well the model handles a task is strongly influenced by how the prompt is formulated.

Prompts guide AI models toward generating human-quality text, translating languages, writing diverse content, processing videos, creating images, and many other tasks. Unlike fine-tuning, prompt engineering requires few computational resources because the model is not retrained. At its core, prompt engineering seeks to optimize communication and interaction by leveraging linguistic, psychological, and technological principles.

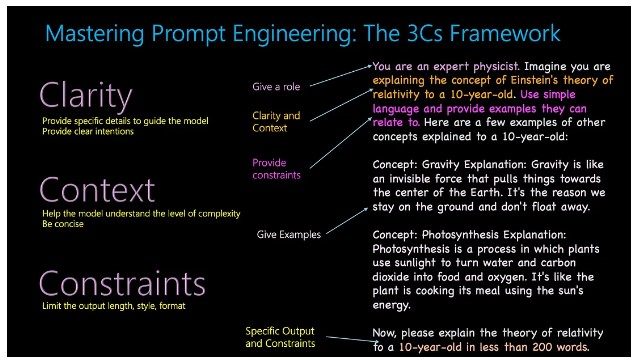

Formulating Prompts – 3Cs Concept (Clarity, Context, Constraints)

Formulating prompts that lead the AI model to the desired outcome takes engineering and expertise. Effective prompt engineering revolves around the 3Cs: clarity, context, and constraints.

Clarity

Clarity ensures that the model can interpret the prompt unambiguously. Clear prompts avoid ambiguity and confusion about the expected outcome. They instruct the model precisely and specify the output format so that the response meets the expected standard.

Context

Prompts should be framed within a relevant, meaningful backdrop. The prompt should provide the LLM with relevant background information about the task and the domain. Context gives the prompt depth and significance, allowing the model to respond to what the task actually requires. By developing context around situational, historical, and thematic relevance, prompts can enhance comprehension and engagement.

Constraints

Constraints define the boundaries within which the LLM generates its response. This involves specifying the desired tone, length, required information, and other criteria that shape the response.

Mastering in-context learning with the 3C framework.
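One way to put the 3Cs into practice is to keep each element as a separate field and assemble the prompt from them. The sketch below is an assumption about how that might look, not a standard API: the `Prompt` class, its field names, and the output layout are hypothetical, and the resulting string would still need to be sent to whichever model you use.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Prompt:
    """Hypothetical container for the 3Cs of a prompt."""
    task: str                                  # Clarity: one precise instruction
    context: str = ""                          # Context: background on task and domain
    constraints: List[str] = field(default_factory=list)  # Constraints: tone, length, format

    def render(self) -> str:
        """Assemble the prompt text: context first, then task, then constraints."""
        if not self.task.strip():
            raise ValueError("A prompt needs a clear task instruction.")
        parts = []
        if self.context:
            parts.append(f"Context: {self.context}")
        parts.append(f"Task: {self.task}")
        if self.constraints:
            parts.append("Constraints:")
            parts.extend(f"- {item}" for item in self.constraints)
        return "\n".join(parts)


prompt = Prompt(
    task="Summarize the customer review in two sentences.",
    context="You support the help desk of an e-commerce website.",
    constraints=["Neutral tone", "At most 50 words"],
)
prompt_text = prompt.render()
print(prompt_text)
```

Separating the three elements makes it easy to change one of them, for example tightening the length constraint, without rewriting the whole prompt.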

Fine-Tuning vs Prompt Engineering

| Aspect | Fine-Tuning | Prompt Engineering |
| --- | --- | --- |
| Definition | Updates the parameters of a pre-trained model by training it on task-specific data. | Designs specialized prompts or input formats that guide the pre-trained model to produce the desired outputs for specific tasks. |
| Scope | Further trains the model on task-specific data, allowing it to learn task-specific patterns and features. | Modifies the input format or provides task-specific cues to the pre-trained model while keeping the model parameters fixed. |
| Training Data | Requires a significant amount of task-specific labeled data for effective fine-tuning. | Relies on prompts or input formats designed around the task requirements, often without the need for additional labeled data. |
| Model Updates | Updates model parameters during training, which can lead to overfitting on the task-specific data. | Does not update the model parameters, thus preserving the generalization capabilities of the pre-trained model. |
| Expertise | Requires expertise in machine learning and LLM architectures. | Requires an understanding of language and prompt crafting. |
| Adaptability | Applicable to a wide range of tasks but may require substantial computational resources and time. | More flexible and efficient for rapidly adapting pre-trained models to new tasks or domains, as it does not involve retraining. |
| Performance Trade-off | Can achieve high performance on specific tasks with sufficient fine-tuning but may suffer from reduced generalization if the task differs significantly from the pre-training data. | Offers a balance between task-specific performance and model generalization, as it leverages the pre-trained model’s knowledge while providing task-specific guidance. |
| Resource Requirements | Requires access to large-scale computational resources and may involve trial-and-error experimentation to optimize hyperparameters. | Typically requires fewer computational resources than fine-tuning, making it more accessible for rapid prototyping and experimentation. |
| Use Cases | Commonly used where high task-specific performance is crucial, such as sentiment analysis, text classification, or language translation. | Suitable for tasks where task-specific guidance or control over the model’s behavior is essential, such as text generation with specific attributes or structured output generation. |
| Cost | Potentially expensive due to computational needs and data acquisition. | Generally less expensive; can be free when using freely available pre-trained models. |

Frequently Asked Questions about Fine-Tuning vs Prompt Engineering

- Is fine-tuning the same as prompt engineering?

Fine-tuning and prompt engineering are related but not identical. Fine-tuning adjusts a pre-trained model by training it on specific tasks or datasets, while prompt engineering refers to crafting effective prompts or instructions to control the behavior of a language model. Fine-tuning changes the model’s parameters, whereas prompt engineering leaves them unchanged and works only on the input text to achieve the desired outputs.

- When is it best to use prompt engineering vs fine-tuning?

Prompt engineering is best when you understand the task well and need to steer the language model’s responses toward a specific use case or domain quickly, without retraining. Fine-tuning, on the other hand, is preferable when you have a substantial dataset and want the model to learn nuanced patterns and details specific to your task. This can lead to better performance but requires more computational resources and data.

- What is fine-tuning in large language models?

Fine-tuning in large language models refers to the process of customizing a pre-trained model for a specific task or domain by further training it on task-specific data. This helps the model adapt its parameters to better understand and generate content relevant to the target task, improving performance and accuracy.

Final thoughts

Fine-tuning and prompt engineering are two different methods of optimizing AI models to produce outcomes that meet domain-specific requirements. Each strategy has advantages and disadvantages that make it suitable for different tasks and applications. Understanding these differences is critical for choosing the best optimization method for a given scenario.

Experience AI’s ability to drive innovation and efficiency in your firm!

Contact Heliosz.AI today for skilled AI consulting services tailored to your requirements. From fine-tuning existing models to creating sophisticated AI applications, our team is ready to assist you in achieving your objectives. Contact us right now to take the next step in realizing the full potential of artificial intelligence in your firm.