2024 is a year in which you will witness a data explosion measured in zettabytes across various datasets. The surging volume, velocity, and variety of data generated every minute pose significant challenges for organizations that want to realize the potential of their data. In this situation, a data pipeline becomes indispensable, providing a systematic approach to sourcing, processing, loading, and storing data at its destination. With evolving needs and a growing number of data pipeline tools in the data industry, choosing the right tool is a crucial decision for efficient data management.

This article will take you through:

- What is a data pipeline?

- What are the top data pipeline tools?

- What business challenges do data pipeline tools overcome?

- Factors to consider while choosing a data pipeline tool

- How Heliosz.ai harnesses the power of popular data pipeline tools according to your business requirements

What is a Data Pipeline?

A data pipeline is an organized collection of procedures, tools, and technologies designed to move data efficiently from its source to its destination. Envision a system of interconnected pipes carrying data through several phases, such as validation, transformation, and cleaning, before it is stored or sent onward, depending on the type of data.

A data pipeline is precisely what it sounds like. Making well-informed decisions that accelerate corporate success depends on an organization’s data pipelines being managed effectively and strategically.
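The phases described above can be sketched in a few lines of Python. This is only a minimal illustration, not the design of any particular tool: the record layout, the `validate`, `transform`, and `load` function names, and the in-memory `warehouse` list are all assumptions made for the example.

```python
from typing import Iterable, Iterator

# Hypothetical raw records, standing in for rows read from a source system.
RAW_RECORDS = [
    {"id": "1", "amount": " 19.99 "},
    {"id": "2", "amount": "not-a-number"},
    {"id": "3", "amount": "5"},
]


def validate(records: Iterable[dict]) -> Iterator[dict]:
    """Validation phase: drop records whose amount is not numeric."""
    for record in records:
        try:
            float(record["amount"])
        except ValueError:
            continue  # a real pipeline would usually log or quarantine this
        yield record


def transform(records: Iterable[dict]) -> Iterator[dict]:
    """Transformation phase: convert string fields to proper types."""
    for record in records:
        yield {"id": int(record["id"]), "amount": float(record["amount"])}


def load(records: Iterable[dict], destination: list) -> None:
    """Load phase: append records to the destination (a list here)."""
    destination.extend(records)


def run_pipeline(source: Iterable[dict], destination: list) -> None:
    """Chain the phases so each record flows from source to destination."""
    load(transform(validate(source)), destination)


warehouse: list = []  # assumed stand-in for a database or data lake
run_pipeline(RAW_RECORDS, warehouse)
print(warehouse)
```

Because each phase is a generator, records move through the stages one at a time, which mirrors the "interconnected pipes" picture.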

What are the Top Data Pipeline tools?

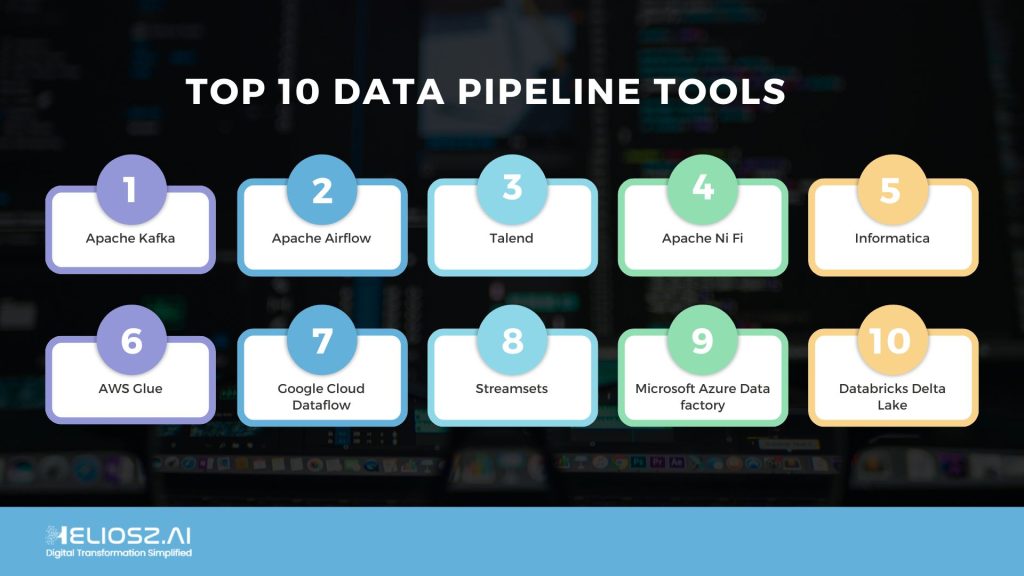

With a plethora of data pipeline tools trending in the market, selecting one from that long list will be a challenging task for any organization. We have curated a list of the top 10 data pipeline tools here. Look at it closely!

1. Apache Kafka

Apache Kafka has established itself as a leading data pipeline tool thanks to its high throughput, scalability, flexibility, fault tolerance, and more. It acts as a centralized, integrated platform for real-time data streams, ensuring smooth data exchange across various systems.

2. Apache Airflow

Apache Airflow is a data pipeline platform that excels at handling complex data flows in a data infrastructure. It offers a rich library of pre-built operators and dynamic DAG (Directed Acyclic Graph) scheduling. This data pipeline tool empowers organizations to automate data flows, monitor data responsibility and stewardship, and manage data efficiently.

Features of Apache Airflow:

- Highly scalable and dynamic

- Known for its flexibility and ability to handle complex workflows

- Airflow is a popular choice for enterprise-level data pipelines
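To make the idea of DAG scheduling concrete, here is a small sketch that orders tasks by their dependencies. It deliberately uses Python's standard-library `graphlib` module rather than Airflow's own API, and the task names are hypothetical. It shows the general technique (topological ordering of a directed acyclic graph), not how Airflow is implemented.

```python
from graphlib import TopologicalSorter

# Hypothetical tasks: each key maps to the set of tasks it depends on.
dependencies = {
    "extract": set(),
    "clean": {"extract"},
    "enrich": {"extract"},
    "load": {"clean", "enrich"},
}

# static_order() yields every task only after all of its dependencies.
order = list(TopologicalSorter(dependencies).static_order())
print(order)
```

In this graph, `clean` and `enrich` do not depend on each other, so a scheduler is free to run them in either order or in parallel. A graph containing a cycle would raise `graphlib.CycleError`, which is why such workflows must be acyclic.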

3. Talend

Talend brings together data management, data governance, data quality management, data integration, and other data processes in a unified platform. Its user-friendly interface and wide library of pre-built data connectors are popular features.

Talend simplifies the process of designing, deploying, and managing data pipelines across heterogeneous environments. It supports real-time data processing and cloud-based deployment options, which makes it well suited to modern data architectures.

Features of Talend:

- A well-rounded platform

- Provides a visual interface for designing data pipelines

- Data integration and management capabilities

4. Apache NiFi

Apache NiFi is an open-source data pipeline tool developed to ease data collection, routing, transformation, and loading in real time. Its intuitive graphical interface enables users to manage data flows of any complexity.

It is built with data provenance features that ensure end-to-end visibility and traceability. This data pipeline tool is easily integrated with various data sources and data destinations, making it a versatile choice for multiple industries.

Features of Apache NiFi:

- Easy to use

- User-friendly drag-and-drop interface

- NiFi is a good option for beginners or for building simpler data pipelines

5. Informatica

With a range of products designed to meet different needs, Informatica is a leading data pipeline tool with enterprise data management capabilities. Informatica PowerCenter, the company’s flagship solution, offers a wide range of powerful data integration features, such as batch and real-time data processing, data quality management, and more.

With cutting-edge capabilities like lineage tracing and AI-powered data matching, Informatica enables companies to achieve data-driven excellence.

Features of Informatica:

- An enterprise-grade solution.

- Offers a comprehensive suite of features for complex data integration tasks.

6. AWS Glue

AWS Glue is a popular data pipeline tool from Amazon Web Services (AWS) that offers fully managed extract, transform, and load (ETL) functions. AWS Glue simplifies the process of developing and managing large-scale data pipelines by leveraging serverless computing.

It is an efficient and effective alternative for cloud-based organizations due to its pay-as-you-go pricing structure and ease of integration with other AWS services. Glue supports batch and streaming data processing, allowing companies to gain real-time, actionable insights from their data.

Features of AWS Glue:

- Ideal for cloud data management in the AWS ecosystem.

- Designed specifically for AWS environments.

- Integrates seamlessly with other AWS services for a cohesive workflow.

7. Google Cloud Dataflow

Google Cloud Dataflow is a fully managed stream and batch processing service that lets businesses easily create and implement data pipelines. Dataflow is based on Apache Beam, and with its unified programming model for batch and streaming data processing, users can create data pipelines once and execute them anywhere.

For companies looking for flexibility and efficiency in their data processing workflows, its serverless architecture, integrated monitoring, and auto-scaling features make it a desirable choice.

Features of Google Cloud Dataflow:

- Real-time AI Capabilities.

- A serverless option from Google Cloud.

- Dataflow excels at handling real-time data streams.

- Supports incorporating AI and machine learning tasks within the pipeline.

8. StreamSets

StreamSets is a data integration platform that aims to make building, implementing, and managing data pipelines easier in any scenario. StreamSets' visual design interface and library of pre-built data connectors enable organizations to ingest, process, and transfer data in real time without having to write code.

Because of its robust error handling, data drift detection, and automatic schema evolution characteristics, it is ideal for use cases requiring resilient and agile data integration.

Features of StreamSets:

- Ideal for Multi-Cloud Environments.

- StreamSets excels at building data pipelines for complex, multi-cloud architectures.

9. Microsoft Azure Data Factory

Microsoft Azure Data Factory is a cloud-based data integration solution for creating, scheduling, and orchestrating data pipelines across hybrid and multi-cloud settings. With support for more than 90 native connectors and integration with Azure services like Azure Synapse Analytics and Azure Databricks, Data Factory provides considerable flexibility and interoperability.

Users with different levels of technical competence can use it because of its drag-and-drop capability and visual interface, while additional capabilities like data lineage and monitoring guarantee data integrity and compliance.

Features of Microsoft Azure Data Factory:

- Cloud-based ETL and data integration service.

- Azure Data Factory offers a powerful and versatile service for building data pipelines within the Microsoft Azure cloud ecosystem.

10. Databricks Delta Lake

Databricks Delta Lake is another popular data pipeline tool, built around an open-source storage layer that brings reliability, consistency, performance, and scalability to data lakes. Delta Lake provides ACID transactions, scalable metadata management, and data processing capabilities.

This tool is well suited to large-scale data pipelines. With support for both batch and streaming data processing, Delta Lake simplifies the process of building real-time analytics applications and machine learning pipelines on top of existing data lakes.

What Business Challenges Do Data Pipeline Tools Overcome?

Data pipeline tools address multiple challenges that arise within an organization when handling huge volumes of data. Some of the challenges that a good data pipeline tool helps overcome are:

Data Silos (Unstructured data and scattered data systems)

Data silos are considered one of the biggest challenges for an organization. Data remains in scattered systems and formats, leading to siloed information that interferes with collaboration and interoperability within the organization. Data pipeline tools ease the integration of data from siloed sources, make that data available for analysis, and enable proper data management.

Data Quality issues (inconsistencies, errors, etc.)

Data quality underpins the decision-making processes in an organization. Poor-quality data undermines the accountability, reliability, and consistency of data analytics and consequently leads to misguided decisions. A top data pipeline tool enables data validation, data cleansing, and data correction, and ensures that only high-quality data moves forward to further analytical stages.
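As a rough illustration of validation and cleansing, the sketch below normalizes a field, rejects invalid records, and removes duplicates while recording why each record was rejected. The `clean_records` function, the `email` field, and the very loose "contains an @" check are assumptions for the example. Real tools apply far richer rules.

```python
def clean_records(records):
    """Return (clean, rejected); rejected holds (record, reason) pairs."""
    clean, rejected = [], []
    seen_emails = set()
    for record in records:
        # Cleansing: trim whitespace and lowercase the email field.
        email = (record.get("email") or "").strip().lower()
        # Validation: a deliberately simplistic check for this sketch.
        if "@" not in email:
            rejected.append((record, "invalid email"))
            continue
        # Deduplication: keep only the first record for each email.
        if email in seen_emails:
            rejected.append((record, "duplicate"))
            continue
        seen_emails.add(email)
        clean.append({**record, "email": email})
    return clean, rejected


# Hypothetical input records with typical quality problems.
records = [
    {"name": "Ada", "email": " Ada@Example.com "},
    {"name": "Ada L.", "email": "ada@example.com"},
    {"name": "Bob", "email": None},
]

clean, rejected = clean_records(records)
print(clean)
print([reason for _, reason in rejected])
```

Keeping the rejected records together with a reason, rather than silently dropping them, makes it possible to audit and correct quality issues at the source.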

Scalability challenges using Data Pipeline tools

Data volumes continue to grow exponentially, and organizations should prepare for unanticipated data loads across their business operations. A well-developed data pipeline is equipped to handle very large volumes of data through features such as parallel data processing, distributed computing, auto-scaling, and more.
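The parallel processing idea can be sketched as splitting a dataset into chunks, processing the chunks concurrently, and combining the partial results. The example below is a single-machine illustration using Python's standard library. The `chunked` and `process_chunk` helpers are hypothetical, and threads are used only to keep the sketch portable (CPU-bound work in Python would typically use processes or a distributed engine instead).

```python
from concurrent.futures import ThreadPoolExecutor


def chunked(items, size):
    """Split a list into consecutive chunks of at most `size` items."""
    for start in range(0, len(items), size):
        yield items[start:start + size]


def process_chunk(chunk):
    """Stand-in for real work: sum of squares of the chunk's values."""
    return sum(value * value for value in chunk)


data = list(range(1, 101))  # hypothetical dataset: the numbers 1..100

# Each chunk is handed to a worker; map() returns results in chunk order.
with ThreadPoolExecutor(max_workers=4) as pool:
    partial_sums = list(pool.map(process_chunk, chunked(data, 25)))

total = sum(partial_sums)
print(total)
```

The same split, process, and combine pattern is what distributed engines apply across many machines, with auto-scaling adjusting the number of workers to the load.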

5 Factors to Consider While Choosing Data Pipeline Tools

While purchasing a data pipeline tool for the data management of your organization and leveraging it as a long-term investment for sustainable ROI, consider these factors.

Scalability and flexibility in Data Pipeline tools

Organizations can grow in unexpected ways, and data volume cannot always be forecast accurately. So, it is vital to buy a data pipeline tool that can handle surges in data volume and accommodate business growth. The tool you are planning to buy should also be flexible enough to work with different data formats, data sources, data destinations, and so on.

Ease of use

Evaluate each tool's user interface and user experience to confirm that your in-house teams will find it simple to work with. A data pipeline tool that is easy to use will speed up adoption and improve usability across your organization.

Integration and deployment of Data Pipeline tools

Consider a tool that offers multiple deployment options and can be integrated with your existing infrastructure with minimal effort. The tool should be compatible with on-premises, cloud-based, or hybrid deployment choices. Also, ensure that the data pipeline tool you choose can integrate with all your systems, applications, cloud stores, and so on for seamless data flow and collaboration.

Data security features

Data security remains a top priority for organizations. Data pipeline tools should be able to prevent unauthorized access and encrypt data using advanced data security measures.

Pricing structure of Data Pipeline tools

Consider whether the tool's pricing fits your budgetary constraints. The license fee, training costs, infrastructure needs, maintenance costs, and all other direct and indirect costs should be analyzed clearly before choosing a data pipeline tool.

Conclusion

Multiple data pipeline tools are available in the realm of data science and data engineering to streamline an organization's processes efficiently. But choosing the right one, then implementing and integrating it with your organization’s data infrastructure, is the top priority.

In this article, we have drawn on our technical expertise to handpick the top 10 data pipeline tools for you. Analyzing the overall cost, features, functionalities, and all other capabilities will ensure you purchase the right data pipeline tool and maximize ROI in the long run.

How Heliosz.ai harnesses the power of popular Data Pipeline tools according to your business requirements

Heliosz.ai is an innovative data solution platform where you can utilize the power of popular data pipeline tools to manage the entire business data process. Our expert team will carefully assess your data infrastructure, business processing needs, expected outcomes, main goals, and business growth plans, and identify the most suitable data pipeline tool from our multiple technology partners, such as Apache Kafka.

Heliosz will ensure the tool is completely aligned with your business objectives. Through meticulous integration, configuration, and optimization, Heliosz.ai will take the responsibility of being the trusted data solution partner to propel your organization toward data-driven excellence.